Machine learning (ML) isn’t just for powerful computers anymore. With TensorFlow Lite for Microcontrollers (TFLM), we can run tiny ML models on low-power devices like the ESP32, enabling real-time intelligence at the edge—no cloud needed!

What is TensorFlow Lite for Microcontrollers?

TensorFlow Lite for Microcontrollers is a version of TensorFlow Lite designed specifically to run machine learning models on devices with limited memory and processing power, such as the ESP32.

Key features include:

- Lightweight and optimized for microcontrollers

- Can run small models in less than 100 KB of RAM

- Supports common ML operations like fully connected layers and convolution
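To see roughly why small models fit in such a budget: TFLM reads a model's weights in place from the const model array (which can stay in flash), so RAM goes mainly to the tensor arena that holds intermediate activations. The Python sketch below estimates both for a stack of fully connected layers. It assumes, as a simplification, that only one layer's input and output buffers are live at once, and it ignores interpreter overhead, scratch buffers, and alignment padding. The function names and layer sizes are hypothetical.

```python
from typing import List

def peak_activation_bytes(layer_sizes: List[int], bytes_per_value: int = 4) -> int:
    """Largest input + output activation footprint of any single layer.

    layer_sizes lists the number of values at each layer boundary,
    e.g. [inputs, hidden1, hidden2, outputs]. Assumes buffers are reused
    so only the current layer's input and output are live at once.
    """
    peak = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # A dense layer reads its whole input while writing its output.
        peak = max(peak, (n_in + n_out) * bytes_per_value)
    return peak

def weight_bytes(layer_sizes: List[int], bytes_per_value: int = 4) -> int:
    """Weights plus biases of the fully connected layers (read from the model array)."""
    return sum((n_in * n_out + n_out) * bytes_per_value
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical float32 model: 1960 inputs, hidden layers of 32 and 16, 2 outputs.
sizes = [1960, 32, 16, 2]
print(peak_activation_bytes(sizes))  # 7968 bytes of activations
print(weight_bytes(sizes))           # 253256 bytes of weights
```

With these numbers, about 250 KB of weights would live in the model array, while the roughly 8 KB of activations is what the RAM budget has to cover.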

Requirements

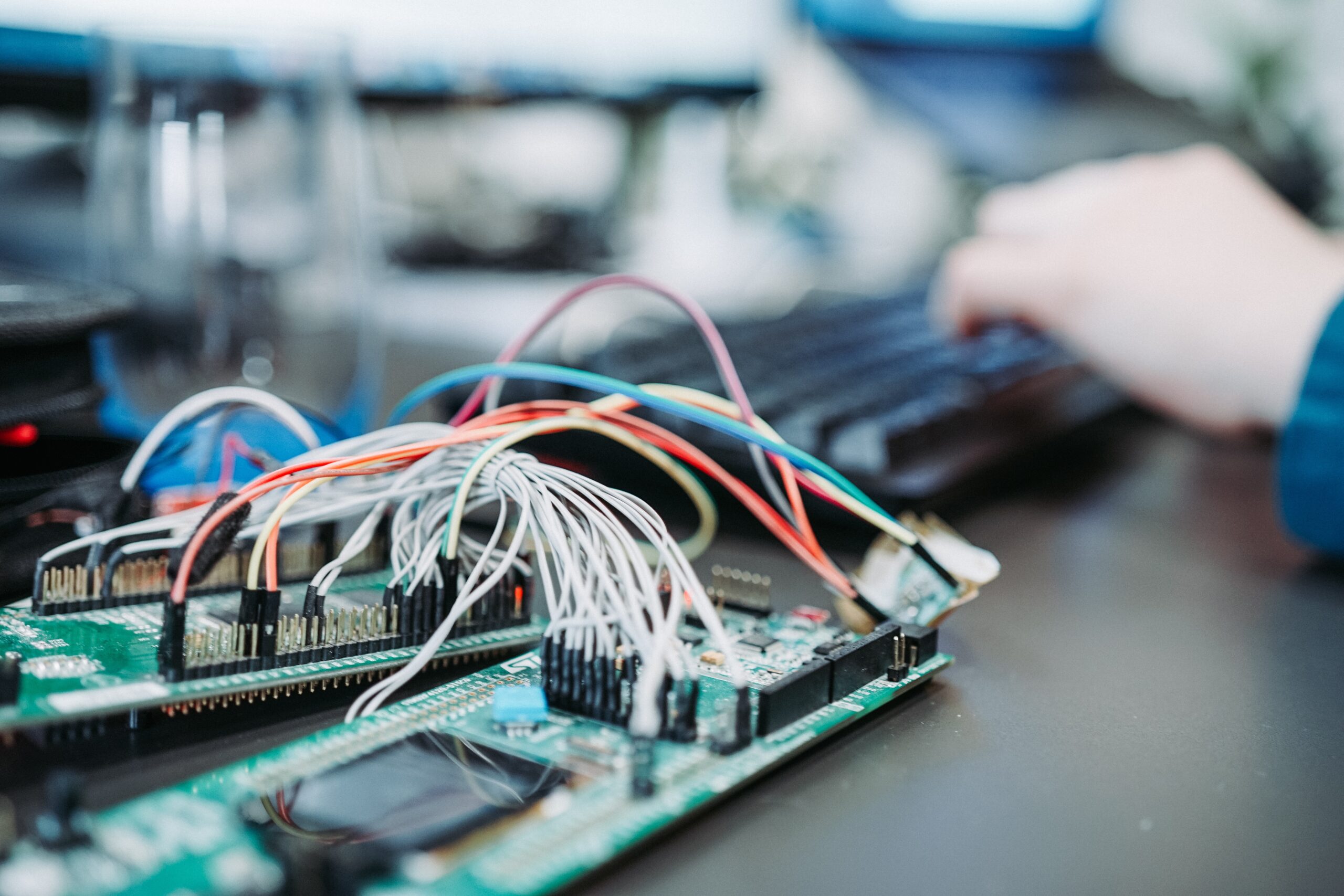

Hardware

- ESP32 Development Board

- Microphone Module (e.g., INMP441 I2S digital microphone or MAX9814 analog microphone amplifier)

- Micro USB Cable

- (Optional) LED

Software

- Arduino IDE

- ESP32 Board Package

- TensorFlow Lite for Microcontrollers Arduino Library (Arduino_TensorFlowLite)

- Python (optional, for custom model preparation)

Setting Up the Environment

1. Install Arduino IDE

2. Install ESP32 Board Support

- Go to File → Preferences and add the following URL to Additional Boards Manager URLs: https://raw.githubusercontent.com/espressif/arduino-esp32/gh-pages/package_esp32_index.json

- Open Tools → Board → Boards Manager, search for "esp32", and install the esp32 package by Espressif Systems.

3. Install TensorFlow Lite Library

- Navigate to Sketch → Include Library → Manage Libraries.

- Search for and install the Arduino_TensorFlowLite library.

Practical Example: Voice Activity Detection (VAD)

This project shows how to detect voice activity with TensorFlow Lite on an ESP32.

Step 1: Download and Prepare the Model

Download the pre-trained model file, audio_preprocessor.tflite.

Convert the downloaded model into a C source file (speech_model_data.cc) with the following Python script:

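```python
# Convert the .tflite model into a C array that is compiled into the sketch.
with open("audio_preprocessor.tflite", "rb") as f:
    data = f.read()

with open("speech_model_data.cc", "w") as f:
    # Declaring the symbols extern first gives the const definitions external
    # linkage, so the sketch can reference them from its own source file.
    f.write("extern const unsigned char micro_speech_model_data[];\n")
    f.write("extern const int micro_speech_model_data_len;\n\n")
    # TFLM reads the model in place; 16-byte alignment matches its examples.
    f.write("alignas(16) const unsigned char micro_speech_model_data[] = {")
    for i, byte in enumerate(data):
        if i % 12 == 0:
            f.write("\n  ")  # start a new row every 12 bytes
        f.write(f"0x{byte:02x}, ")
    f.write("\n};\nconst int micro_speech_model_data_len = ")
    f.write(f"{len(data)};\n")
```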
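Before flashing, it can be worth checking that the downloaded file really is a TFLite model and that the generated C array round-trips to the same bytes. The Python sketch below relies on the fact that TFLite models are FlatBuffers with the file identifier `TFL3` at byte offset 4; the helper names (`looks_like_tflite`, `parse_c_array`, `parse_c_length`, `verify`) are hypothetical, and the parser assumes the layout the conversion script writes (one brace-delimited array followed by a `..._len = N;` constant).

```python
import re

def looks_like_tflite(data: bytes) -> bool:
    """TFLite models are FlatBuffers whose file identifier b"TFL3" sits at offset 4."""
    return len(data) >= 8 and data[4:8] == b"TFL3"

def parse_c_array(source: str) -> bytes:
    """Recover the bytes listed between the first '{' and the following '}'."""
    start = source.index("{") + 1
    end = source.index("}", start)
    return bytes(int(tok, 16) for tok in re.findall(r"0x[0-9a-fA-F]{2}", source[start:end]))

def parse_c_length(source: str) -> int:
    """Read the value assigned to the generated *_len constant."""
    match = re.search(r"_len\s*=\s*(\d+)\s*;", source)
    if match is None:
        raise ValueError("no *_len definition found")
    return int(match.group(1))

def verify(tflite_path: str, cc_path: str) -> None:
    """Raise ValueError if the generated C file does not match the model file."""
    with open(tflite_path, "rb") as f:
        model = f.read()
    with open(cc_path) as f:
        generated = f.read()
    if not looks_like_tflite(model):
        raise ValueError(f"{tflite_path} does not look like a TFLite FlatBuffer")
    if parse_c_array(generated) != model:
        raise ValueError("array bytes differ from the model file")
    if parse_c_length(generated) != len(model):
        raise ValueError("length constant does not match the model size")
```

After running the conversion script, `verify("audio_preprocessor.tflite", "speech_model_data.cc")` should return without raising.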

Step 2: Arduino Sketch (Main Code)

Open the Arduino IDE and create a new sketch, then copy this code into the sketch file.

Step 3: Real Audio Input (Optional)

To incorporate a microphone:

- Use I2S to read microphone data (e.g., INMP441).

- Convert the audio into a log Mel spectrogram, using the same settings the model was trained with.

- Feed real audio input instead of the dummy data.
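When an INMP441 is read over I2S, the ESP32 is commonly configured for 32-bit slots, with each 24-bit sample left-justified in a 32-bit word. Assuming that layout, a mono channel, and a little-endian buffer, the conversion to 16-bit samples is a single arithmetic shift. The Python sketch below shows that step on raw bytes so it can be checked on a desktop; the function name is hypothetical, and the same shift would go in the sketch's read loop.

```python
import struct
from typing import List

def i2s_words_to_int16(raw: bytes) -> List[int]:
    """Convert raw I2S bytes into 16-bit samples.

    Assumes each sample arrives as a 32-bit little-endian signed word with
    the microphone's 24-bit value left-justified (an assumption about the
    I2S configuration). Trailing bytes that do not fill a word are ignored.
    """
    count = len(raw) // 4
    words = struct.unpack(f"<{count}i", raw[: count * 4])
    # An arithmetic right shift by 16 keeps the top 16 bits and the sign.
    return [w >> 16 for w in words]
```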

You can use a library such as arduinoFFT to compute the FFT.
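To make the log Mel spectrogram step concrete, here is a minimal pure-Python sketch: Hann-windowed frames, a power spectrum, triangular filters evenly spaced on the mel scale, and a natural log. It uses a naive DFT for clarity where the ESP32 would use an FFT library. The sample rate, frame length (480 samples, 30 ms at 16 kHz), hop (320 samples, 20 ms), and 40 filters are hypothetical defaults. The features must match whatever preprocessing the model was trained with, which this generic version does not reproduce exactly.

```python
import cmath
import math
from typing import List, Optional, Sequence

def hz_to_mel(f: float) -> float:
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def power_spectrum(frame: Sequence[float]) -> List[float]:
    """Periodic-Hann-windowed |DFT|^2 for bins 0..N/2 (naive O(N^2) DFT)."""
    n = len(frame)
    x = [s * (0.5 - 0.5 * math.cos(2.0 * math.pi * i / n)) for i, s in enumerate(frame)]
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) ** 2
            for k in range(n // 2 + 1)]

def mel_filterbank(num_filters: int, n_fft: int, sample_rate: float,
                   f_min: float = 0.0, f_max: Optional[float] = None) -> List[List[float]]:
    """Triangular filters whose edges are evenly spaced on the mel scale."""
    if f_max is None:
        f_max = sample_rate / 2.0
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    edges = [mel_to_hz(lo + i * (hi - lo) / (num_filters + 1)) for i in range(num_filters + 2)]
    bin_hz = [k * sample_rate / n_fft for k in range(n_fft // 2 + 1)]
    bank = []
    for left, center, right in zip(edges, edges[1:], edges[2:]):
        row = []
        for f in bin_hz:
            if left < f <= center:
                row.append((f - left) / (center - left))    # rising slope
            elif center < f < right:
                row.append((right - f) / (right - center))  # falling slope
            else:
                row.append(0.0)
        bank.append(row)
    return bank

def log_mel_spectrogram(samples: Sequence[float], sample_rate: float = 16000,
                        frame_len: int = 480, hop: int = 320,
                        num_filters: int = 40, eps: float = 1e-6) -> List[List[float]]:
    """One row of num_filters log-mel energies per hop of `hop` samples."""
    bank = mel_filterbank(num_filters, frame_len, sample_rate)
    rows = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        spec = power_spectrum(samples[start:start + frame_len])
        # eps avoids log(0) for filters that receive no energy.
        rows.append([math.log(sum(w * p for w, p in zip(row, spec)) + eps) for row in bank])
    return rows
```

Running this on a desktop against recorded clips is a convenient way to compare with the features computed on the device; the naive DFT makes it slow for long recordings.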

Application Possibilities

- Smart Speakers

- Voice-controlled Devices

- Noise-level Monitoring Systems

Next Steps and Further Exploration

- Train custom models easily with Edge Impulse.

- Add network capabilities using the ESP32's built-in Wi-Fi.