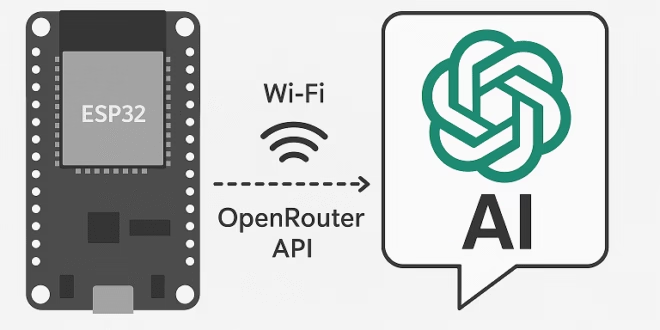

Large Language Models (LLMs) like ChatGPT are usually something you access from a laptop or phone. But what if your humble ESP32 could send a question over Wi-Fi and get an answer back? That’s what we’ll build in this tutorial. We’ll make the ESP32 query an LLM through the OpenRouter API, and print the response on Serial. We’ll cover two approaches:

- Direct: ESP32 → OpenRouter API

- With Proxy: ESP32 → Python Flask Server → OpenRouter API

By the end, you’ll have an ESP32 that can “talk” to AI, and you’ll know which setup suits demos and which suits real projects.

What is OpenRouter?

OpenRouter is a service that lets you use many different AI models (OpenAI, Anthropic, Mistral, LLaMA, etc.) with just one API. Instead of juggling a separate key and endpoint for each provider, you send every request to a single chat completions endpoint with:

- Authorization: Bearer <API_KEY>

- Optional: HTTP-Referer and X-Title headers (to identify your app)

- JSON body with model, messages, etc.

The response follows the same shape as OpenAI’s API: a JSON object in which choices[0].message.content holds the reply text.
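To make the request shape concrete, here is a minimal Python sketch that builds the headers and JSON body described above and pulls the reply out of a response. The endpoint URL, the model id, and the placeholder header values are assumptions for illustration; check them against the OpenRouter documentation before relying on them.

```python
import json

# Assumption: the public OpenRouter chat completions URL (not stated in this article).
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key, prompt, model="openai/gpt-4o-mini", max_tokens=64):
    """Return (headers, body_bytes) for one chat completions call.

    The model id is only a placeholder; use any model your account can access.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        # Optional headers that identify your app (placeholder values).
        "HTTP-Referer": "https://example.com",
        "X-Title": "ESP32 LLM demo",
    }
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # keep replies short for a small device
    }
    return headers, json.dumps(body).encode("utf-8")


def extract_content(response_text):
    """Pull choices[0].message.content out of a response body."""
    data = json.loads(response_text)
    return data["choices"][0]["message"]["content"]
```

You would POST the returned body to OPENROUTER_URL with those headers, then pass the response text to extract_content(). The ESP32 sketch has to do the same two jobs, just in C++.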

Approach A: ESP32 Directly to OpenRouter

This is the “bare minimum” setup: the ESP32 connects to Wi-Fi and makes an HTTPS POST request.

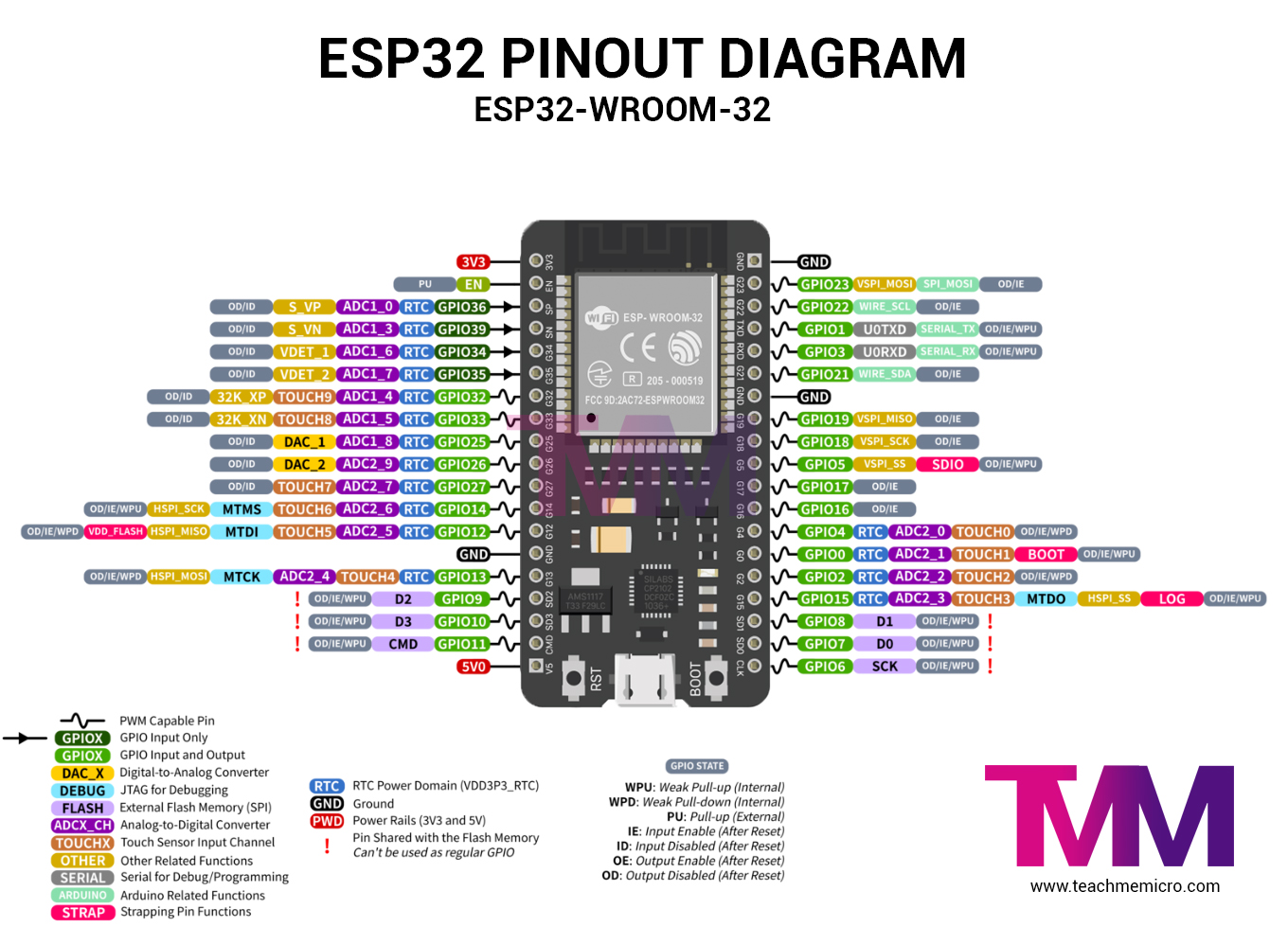

Hardware Needed

- Any ESP32 development board

- Wi-Fi connection

- Arduino IDE

Sketch

Things to Note

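- API key is on the device. Anyone with physical access to your ESP32 can read the flash and extract it.

- TLS certificates. The sketch calls setInsecure() to skip certificate validation, which is acceptable only for a demo. For anything more, embed the root CA certificate for OpenRouter’s server and load it with setCACert().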

- Memory. ESP32 RAM is small. Don’t ask for long essays; keep max_tokens low.

- Parsing JSON. The full response is big. Use ArduinoJson filters if you only need choices[0].message.content.

This works fine for demos and lab projects. But for real-world apps, we need more control.

Approach B: ESP32 → Python Proxy → OpenRouter

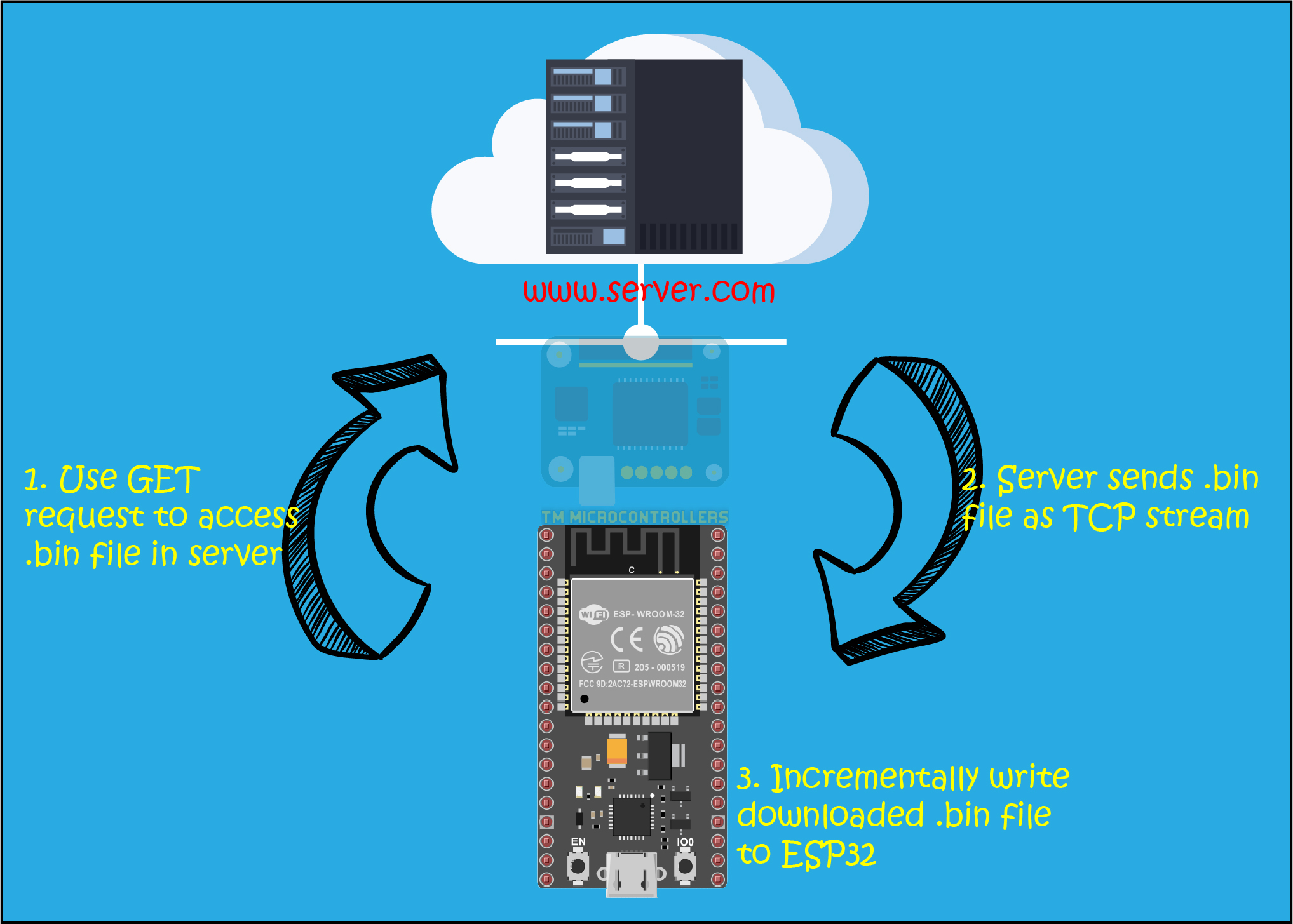

Here, the ESP32 doesn’t talk to OpenRouter directly. Instead, it calls a tiny Python Flask server you run on your PC, Raspberry Pi, or VPS. The proxy calls OpenRouter, trims the answer, and returns a small JSON.

Why bother?

- No API key on the ESP32.

- Responses are small and easy to parse.

- You can switch models or preprocess answers without touching the ESP32.
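The "trims the answer" step can be very small. The sketch below shows one way a proxy might shrink a reply before sending it to the ESP32; the function names and the 200-character limit are hypothetical choices, not values from this project.

```python
import json


def trim_for_device(text, max_chars=200):
    """Collapse whitespace and cap the length (assumes max_chars >= 3)."""
    clean = " ".join(text.split())  # newlines and runs of spaces become single spaces
    if len(clean) > max_chars:
        clean = clean[: max_chars - 3].rstrip() + "..."
    return clean


def to_device_json(text, max_chars=200):
    """Build the compact {"text": ...} payload the ESP32 will parse."""
    return json.dumps({"text": trim_for_device(text, max_chars)}, separators=(",", ":"))
```

For example, to_device_json("Hi   from\nthe LLM!") returns {"text":"Hi from the LLM!"}. Because the limit lives on the server, you can tune it without reflashing the board.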

Setting Up the Python Flask Proxy on Windows

We’ll create a tiny Flask server that your ESP32 can call on your home network. These steps use Windows 10/11, PowerShell, and Python 3.10+.

1) Install Python (and add to PATH)

- Download Python from python.org → Downloads → Windows.

- Run the installer and tick “Add python.exe to PATH.”

- Verify in PowerShell:

If either command is not found, sign out/in or reboot.

2) Make a project folder

Open PowerShell and create a folder anywhere (e.g., Documents):

    cd $HOME\Documents
    mkdir esp32-llm-proxy
    cd esp32-llm-proxy

3) Create and activate a virtual environment

A venv keeps your dependencies clean and local to the project.

If you get an execution policy error, run PowerShell as Administrator once:

Set-ExecutionPolicy RemoteSigned

Then retry Activate.ps1.

4) Add the three files

Create requirements.txt, .env, and server.py in the folder.

requirements.txt

server.py
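As a rough guide, here is a minimal sketch of what a server.py for this setup could look like. It is not necessarily the exact file used in this project. It assumes Flask 2.0 or newer, that the key is already in the environment as OPENROUTER_API_KEY (for example, loaded from your .env file), and that the endpoint URL and default model id below are correct for your account. It uses the standard library's urllib for the outbound call to keep dependencies low.

```python
import json
import os
import urllib.request

# Assumptions (not from the article): endpoint URL, env variable names, model id.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL = os.environ.get("OPENROUTER_MODEL", "openai/gpt-4o-mini")
MAX_CHARS = 200  # hypothetical cap on the reply sent to the ESP32


def ask_openrouter(question):
    """Send one user message to OpenRouter and return the reply text."""
    body = json.dumps({
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": 64,
    }).encode("utf-8")
    req = urllib.request.Request(
        OPENROUTER_URL,
        data=body,
        headers={
            "Authorization": "Bearer " + os.environ["OPENROUTER_API_KEY"],
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


def shorten(text, limit=MAX_CHARS):
    """Collapse whitespace and cap the length so the ESP32 gets a small payload."""
    clean = " ".join(text.split())
    return clean if len(clean) <= limit else clean[: limit - 3] + "..."


def create_app(ask=ask_openrouter):
    """Build the Flask app; `ask` can be replaced with a stub for offline testing."""
    # Imported here so the helper functions above also work without Flask installed.
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.post("/ask")
    def ask_route():
        payload = request.get_json(silent=True) or {}
        question = str(payload.get("q", "")).strip()
        if not question:
            return jsonify(error="missing 'q'"), 400
        try:
            return jsonify(text=shorten(ask(question)))
        except Exception as exc:  # network errors, bad key, unexpected response shape
            return jsonify(error=str(exc)), 502

    return app
```

To start it, add a last line such as create_app().run(host="0.0.0.0", port=3000). Binding to 0.0.0.0 lets the ESP32 reach the server over your LAN, and port 3000 matches the test command used in this tutorial.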

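Test from your PC. The command below uses bash-style quoting, so run it from Git Bash or WSL; in Windows PowerShell, curl is an alias for Invoke-WebRequest and does not accept these flags: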

curl -X POST http://localhost:3000/ask -H "Content-Type: application/json" -d '{"q":"Say hi!"}'
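If you would rather avoid shell quoting differences, the same check can be done from Python, which you already have installed. This is a small sketch using only the standard library; it assumes the proxy listens on port 3000, accepts a JSON body with a "q" field, and answers with a "text" field, as in the curl example.

```python
import json
import urllib.request


def build_ask_request(question, base_url="http://localhost:3000"):
    """Create the POST /ask request: a JSON body with a "q" field."""
    body = json.dumps({"q": question}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/ask",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_proxy(question, base_url="http://localhost:3000", timeout=30):
    """Send the question and return the "text" field of the proxy's reply."""
    req = build_ask_request(question, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["text"]
```

With the server running, print(ask_proxy("Say hi!")) should show the model's short reply. To test from another machine on your network, pass that PC's LAN address as base_url.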

Open the Serial Monitor at 115200 baud. You should see the tiny JSON returned by the proxy:

{"text":"Hi from the LLM!"}

Pros and Cons

Direct (ESP32 → OpenRouter)

✔ Simple, no server

✔ One less hop

✘ API key in firmware

✘ Large JSON responses

✘ Hard to change models without reflashing

Proxy (ESP32 → Flask → OpenRouter)

✔ API key stays safe

✔ Tiny, clean responses

✔ Easy to swap models or add features (caching, logging, RAG)

✘ Requires hosting a server

✘ One extra hop

When to Use Which

- For a classroom demo or quick experiment: Direct is fine.

- For anything serious (multiple devices, production, or publishing a project): Use a proxy.

Final Thoughts

This project shows how the ESP32 — a microcontroller with just a few hundred KB of RAM — can still talk to cutting-edge AI models. Thanks to OpenRouter, you can choose models freely without rewriting code.

Start with the direct approach if you just want to see “Hello from ESP32” echoed back by an AI. But if you plan to make an AI gadget (like a smart display or voice assistant), invest the extra step: build a proxy. It makes your setup more secure, scalable, and flexible.